AppSumo Reveals Its A/B Testing Secret: Only 1 Out of 8 Tests Produces Results

This is the second article in our series of interviews and guest posts on A/B testing and conversion rate optimization. In the first article, we interviewed Oli from Unbounce on landing page best practices.

Editor’s note: This guest post is written by Noah Kagan, founder of the web app deals website AppSumo. I have known Noah for quite some time, and he is the go-to person for any marketing or product management challenge. You can follow him on Twitter @noahkagan. In the article below, Noah shares some of the A/B testing secrets and realities he discovered after running hundreds of tests on AppSumo.

Only 1 out of 8 A/B tests has driven significant change

AppSumo.com gets around 5,000 visitors a day. A/B testing has given us some dramatic gains, such as increasing our email conversion rate more than 5x and doubling our purchase conversion rate.

However, I also want to share some harsh realities about our testing experience. I hope sharing them encourages you not to give up on testing and to get the most out of it. Here’s a data point that will probably surprise you:

Only 1 out of 8 A/B tests has driven significant change.

That’s preposterous. Not just a great vocab word, but a harsh reality. Here are a few of our tests that I was SURE would produce amazing results, only for them to disappoint us later.

A/B test #FAIL 1

Hypothesis: Title testing. We get a lot of traffic to our landing page, and a clearer message will significantly increase conversions.

Result: Inconclusive. We’ve tried over 8 versions, and so far not one has produced a significant improvement.

Why it failed: People don’t read. (Note: the real answer here is “I don’t know why it didn’t work out; that’s why I’m doing A/B testing.”)

Suggestion: We need more drastic changes to our page, such as showing more information about our deals or adding pictures, to improve the conversion rate.

A/B test #FAIL 2

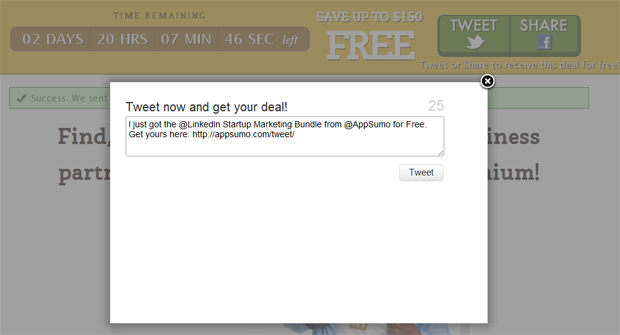

Hypothesis: Showing a “tweet for a discount” offer in a pop-up lightbox vs. making someone click a button to tweet. We assumed that removing a click and putting the offer (annoyingly) in front of someone’s face would encourage more tweets.

Result: 10% decrease with the lightbox version.

Why it failed: ANNOYING. Totally agree. Also, it was premature: people had no idea what the offer was and weren’t interested in tweeting at that moment.

Suggestion: Better integrate people’s desire to share into our site design.

A/B test #FAIL 3

Hypothesis: A discount would encourage more people to give us their email on our landing page.

Result: Fail. Email conversion on our landing page decreased.

Why it failed: An email address is a precious resource, and we are dealing with sophisticated users. Unless you are already familiar with our brand (which is a small audience), you aren’t super excited to trade your email for a percentage off.

Suggestion: Give away a dollar amount instead of a percentage off. Also, pair the percentage off with example deals so people can see what they could use it for.

Thoughts on failed A/B tests

All of these were a huge surprise and a disappointment for me.

How many times have you said, “This experience is 100x better; I can’t wait to see how much it beats the original version”?

A few days later, you check your testing dashboard only to see it actually LOSING.

A word of caution: beware of premature e-finalization. Don’t end tests before the data is final (i.e., the results are statistically significant).

I learned most of my testing philosophy at SpeedDate, where literally every change is tested and measured. SO MANY times my tests initially blew the original version away, only for me to find out a few days later that a) the improvement wasn’t so amazing after all, or b) it actually lost.
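To make “statistically significant” concrete, here is a minimal Python sketch of a standard two-proportion z-test, one common way to compare two conversion rates. It is an illustration, not AppSumo’s or SpeedDate’s tooling: the function name and the visitor and conversion counts are hypothetical, and commercial testing tools may use other methods (for example, sequential or Bayesian ones).

```python
import math
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> Tuple[float, float]:
    """Compare two conversion rates; return (z, two-sided p-value).

    conv_a / n_a: conversions and visitors for the original (control) page.
    conv_b / n_b: conversions and visitors for the variant.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate: the best estimate if both pages really convert the same.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        # No variation at all (e.g. zero conversions on both sides): no evidence.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value: P(|Z| >= |z|) for a standard normal Z.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: 4.0% vs 4.8% conversion on 2,500 visitors each.
    z, p = two_proportion_z_test(100, 2500, 120, 2500)
    print(f"z = {z:.2f}, p = {p:.3f}, significant at 5%: {p < 0.05}")
```

With these made-up numbers the variant looks 20% better, yet the p-value comes out around 0.17, well above the usual 0.05 cutoff, so declaring a winner here would be exactly the premature e-finalization warned about above. The same relative lift on ten times the traffic would be significant. Also note that checking every day and stopping at the first p < 0.05 inflates false positives, which is one reason early “wins” often fade.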

How can you get the most out of your tests?

Some A/B testing tips based on my experience:

- Weekly iterations. This is the most effective way I’ve found to do A/B testing:
  - Pick only 1 thing you want to improve. Let’s say it’s the purchase conversion rate of first-time visitors.
  - Get a benchmark of what that conversion rate currently is.
  - Run 1-3 tests per week to increase it.
  - Do this every week until you hit an internal goal you’ve set for yourself.
  - Most people test 80 different things instead of testing 1 priority over and over. Focusing on one thing simplifies your life.

- Patience. Realize that getting results may take a few thousand visits or 2 weeks. Pick bigger changes to test so you aren’t waiting around for small improvements.

- Persistence. Knowing that 7 out of 8 of your tests will produce insignificant improvements should reassure you that you aren’t doing it wrong. That’s just how it is. How badly do you want those improvements? Stick with it.

- Focus on the big. I say this way too much, but you still won’t listen. Some of you will, and you’ll see big results from it. If you have to wait 3-14 days for your A/B tests to finish, you’d rather test changes dramatic enough to move results by -50% or +200% than chase a 1-2% change. This may depend on where you are in your business, but you likely aren’t Amazon, so a 1% improvement won’t make you a few million dollars more.
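The arithmetic behind “Patience” and “Focus on the big” can be sketched with the standard sample-size approximation for a two-sided two-proportion z-test. This is a minimal Python sketch, not AppSumo’s method: the 4% baseline conversion rate is a hypothetical number, the defaults (5% significance, 80% power) are common conventions rather than figures from the article, and the day estimate assumes all of the article’s 5,000 daily visitors enter the test, split evenly between two variants.

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline: float, relative_lift: float,
                         alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed in EACH variant for a two-sided
    two-proportion z-test to detect `relative_lift` over `baseline`.

    baseline: current conversion rate, e.g. 0.04 for 4% (hypothetical).
    relative_lift: change you hope to detect, e.g. 0.5 for +50%.
    alpha: false-positive rate you accept; power: chance of catching a real lift.
    """
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for power = 0.8
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Assumption: all 5,000 daily visitors are split evenly across 2 variants.
    DAILY_VISITORS = 5000
    for lift in (0.02, 0.5):
        n = visitors_per_variant(baseline=0.04, relative_lift=lift)
        days = math.ceil(2 * n / DAILY_VISITORS)
        print(f"{lift:.0%} lift: {n:,} visitors per variant, ~{days} day(s)")
```

Under these assumptions, detecting a 2% relative lift needs roughly 950,000 visitors per variant (over a year of traffic), while a 50% lift needs fewer than 2,000 per variant, a few thousand visits in total. That gap is why bigger changes are worth testing when your traffic is modest.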

If you liked this article, follow @appsumo for more and check out AppSumo.com for fun deals.

Editor’s note: I hope you liked this guest post. It is true that many A/B tests produce insignificant results, and that’s precisely why you should be A/B testing all the time. For the next articles in this series, if you know someone I could interview or want to contribute a guest post yourself, please get in touch with me.